Nobody likes to think about their technology not working. But when your business is on the line, that’s exactly where your head should be. In the world of infrastructure, there’s one universal truth. Technology fails. And if it hasn’t already, it’s only a matter of time.

So, regardless of what you pay for hosting, it’s advisable to put in place a built-in safety net for when things go wrong.

And that safety net is server redundancy.

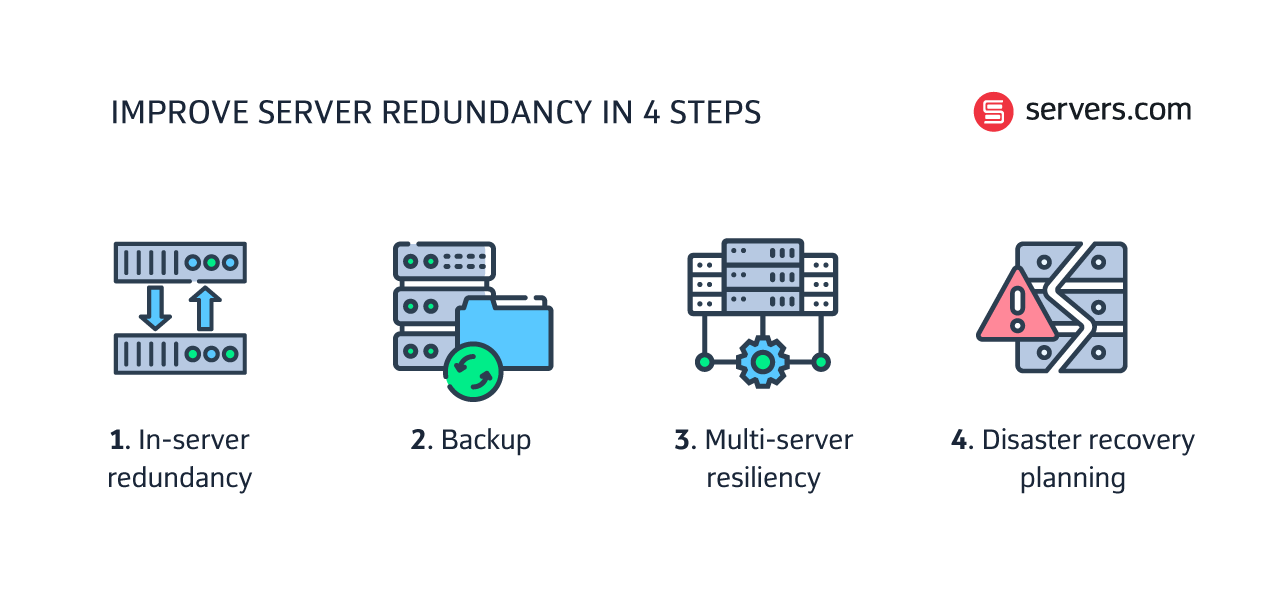

In this blog, we discuss what server redundancy is and share four ways to improve redundancy within your infrastructure ecosystem. Starting with in-server redundancy and working through to full-scale disaster recovery, we’ll provide some tips for how to mitigate the risk of server failure and achieve infrastructure resiliency.

Server redundancy means putting in place redundant systems to protect against data loss and/or server failure, for example by setting up one or more backup servers to support a primary server. If the primary server fails, a redundant server will take over so your website or application stays online.

It’s a common misconception that choosing a state-of-the-art data center is enough to guarantee rock-solid infrastructure. In reality, server redundancy depends much more on the design of the individual server setup.

Installing RAID (Redundant Array of Independent Disks) is one of the simplest things that a platform can do to improve the resiliency of its infrastructure stack. RAID is a data storage system that can be implemented at the software or hardware level to provide both redundancy and performance benefits.

There are many different RAID levels available (RAID 0, 1, 5, 6, and 10 are the most widely used). All RAID levels work by storing data across multiple drives, but the method varies. Because of these differences, specific RAID levels will be better suited to certain jobs depending on an application’s individual requirements.

For more information about the different RAID levels, you can consult the table below.

| Level | Method | Pros | Cons | Use cases |
| --- | --- | --- | --- | --- |
| RAID-0 | Data is divided into blocks and spread across multiple drives, through a process called disk striping. | Fast read and write speeds. Throughput scales with the number of drives. | No protection against data loss. If any drive fails, the whole data set is lost. | Best suited to non-critical data storage requiring high read/write speeds. |
| RAID-1 | Data is replicated across two or more drives, through a process called mirroring. | Data can be easily recovered. Mirrored drives are exact replicas, meaning that if either should fail the other will handle the load. | Write speeds are no better than a single drive. Effective storage capacity is half the total drive capacity in a two-drive mirror (because all data is written twice). A hot swap of a failed drive is not always possible. | Best suited for mission-critical storage and small servers with two data drives. |
| RAID-5 | Data is striped across drives (minimum 3). Parity data calculated from the block data is distributed across all drives. | Can withstand a single drive failure without losing data access or incurring data loss. Fast read speeds. | If more than one drive fails, the data set will be lost. Even though single disk failures are recoverable, it can take over 24 hours to rebuild the array (RAID-5 systems remain vulnerable to data loss during this time). | Best suited to file and application servers with a limited number of drives. |
| RAID-6 | Works just like RAID-5, but with a minimum of 4 drives and two sets of parity data instead of one. | More fault tolerant than RAID-5. Can withstand two drives failing simultaneously. Fast read speeds. | Slower write speeds due to additional parity data. Rebuilding an array after a drive failure can be time consuming. | Best suited to file and application servers with many large drives. |
| RAID-10 | Combines RAID-1 and RAID-0 to form a stripe of mirrors. Data is mirrored across pairs of drives, with additional striping across the pairs to increase read and write speeds. | Data from a failed drive can be recovered quickly. Rebuild time is very fast since all that is required is to copy data from a mirror drive to a new drive. Can technically withstand the loss of up to half of the total disks in the array (provided failed disks are each from a different disk pair). | Can be expensive since half of the total storage capacity goes towards mirroring. | Best suited to I/O-intensive applications and organizations that especially need to mitigate downtime. |

Using an online RAID calculator can assist with RAID planning by allowing you to calculate the capacity, speed gain, and fault tolerance of your storage array based on inputted RAID parameters (number of disks, single disk size, RAID type).
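As a rough illustration of what such a calculator works out, here is a minimal Python sketch. The names `RaidSummary` and `raid_summary` are hypothetical, and the sketch assumes identical drives, a RAID-1 array in which every drive holds a full copy, and a RAID-10 array built from two-drive mirror pairs. It reports usable capacity and the number of drive failures the array is guaranteed to survive in the worst case, and leaves out speed gain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RaidSummary:
    usable_tb: float          # capacity left over for data
    guaranteed_failures: int  # drive failures survived in the worst case


def raid_summary(level: int, drives: int, drive_tb: float) -> RaidSummary:
    """Estimate capacity and fault tolerance for an array of identical drives."""
    minimum_drives = {0: 2, 1: 2, 5: 3, 6: 4, 10: 4}
    if level not in minimum_drives:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < minimum_drives[level]:
        raise ValueError(f"RAID-{level} needs at least {minimum_drives[level]} drives")

    if level == 0:
        # Pure striping: all capacity is usable, nothing is protected.
        return RaidSummary(drives * drive_tb, 0)
    if level == 1:
        # Assumption: every drive is a full mirror of the same data.
        return RaidSummary(drive_tb, drives - 1)
    if level == 5:
        # One drive's worth of capacity is spent on parity.
        return RaidSummary((drives - 1) * drive_tb, 1)
    if level == 6:
        # Two drives' worth of capacity is spent on parity.
        return RaidSummary((drives - 2) * drive_tb, 2)

    # RAID-10 with two-drive mirror pairs.
    if drives % 2 != 0:
        raise ValueError("RAID-10 needs an even number of drives")
    # Worst case: the second failure hits the same mirror pair as the first.
    return RaidSummary((drives // 2) * drive_tb, 1)


if __name__ == "__main__":
    for level in (0, 1, 5, 6, 10):
        print(f"RAID-{level}:", raid_summary(level, drives=4, drive_tb=2.0))
```

Note that RAID-10 is listed with a guaranteed tolerance of one drive: it can survive more failures, but only if each failed drive belongs to a different mirror pair.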

Other factors that boost in-server redundancy include:

Dual power supply unit (PSU). As the name suggests, this means having two power supply units on your server. So, if one power supply should fail, the other can take over.

Investing in stronger network redundancy. Connecting your server to additional network switches ensures that if one switch fails, a redundant switch takes over and the network remains operational.

Even high performance servers need redundancy built in. Combining all these measures will make a single server as redundant as it can be. However, to increase redundancy further, businesses need additional capacity beyond the single server in the form of backup.

To achieve higher levels of infrastructure redundancy, the data on a single server should be backed up in a separate backup server or storage device. The backup server can be in the same data hall, a different data hall or in a physically diverse location (a secondary site).

Each of these options, in that order, offers a stronger level of redundancy than the last.

The backup server or storage device should be updated on a regular schedule (for example hourly, daily, or weekly) and will hold a copy of the application’s data. If the primary server goes down, there is a safe copy of that data and its version history on the backup server or storage device.

Backups protect against physical faults at the server level, developer errors, and (in the case of secondary site backups) environmental threats like fires within the data hall. However, even with backups in place it can take weeks to get an application back online after an incident.

Some platforms choose to handle their resiliency by using multiple dedicated servers. In these instances, placing a load balancer in front of two or more application servers will help to improve server redundancy.

Load balancing distributes traffic across multiple servers so if one of the servers goes down there is another keeping the website or application running. Load balancers enable applications to scale beyond the capacity of a single server.
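The idea can be sketched in a few lines of Python. This is a simplified illustration rather than how any particular load balancer is implemented: the class `RoundRobinBalancer` and the server names are hypothetical, and health status is set by hand here, whereas a real load balancer would probe the servers itself.

```python
class RoundRobinBalancer:
    """Hands out servers in turn, skipping any that are marked as down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._index = 0

    def mark_down(self, server):
        # In practice this would be triggered by a failed health check.
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Try each server at most once, starting where the last call stopped.
        for _ in range(len(self.servers)):
            server = self.servers[self._index]
            self._index = (self._index + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app-1", "app-2"])
    print([balancer.next_server() for _ in range(4)])  # alternates between both
    balancer.mark_down("app-2")
    print([balancer.next_server() for _ in range(4)])  # only app-1 is used
```

With one server marked as down, every request goes to the remaining server, which is the behaviour that keeps the website or application running.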

By contrast, if you’re looking to improve the resiliency of your database software, you will need to provision additional database servers as a high availability (HA) active-passive pair. Active-passive availability means that the database has an active node that can process requests and a hot spare that can take over in a disaster.
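To make the active-passive idea concrete, here is a minimal Python sketch of the failover decision. It is an illustration under stated assumptions, not a description of any specific database product: the class `ActivePassivePair`, the node names, and the rule of promoting the spare after a set number of consecutive failed health checks are all hypothetical.

```python
from typing import Optional


class ActivePassivePair:
    """Tracks which node serves requests and promotes the spare on failure."""

    def __init__(self, active: str, standby: str, failure_threshold: int = 3):
        self.active = active
        self.standby: Optional[str] = standby
        self.failure_threshold = failure_threshold
        self._consecutive_failures = 0

    def record_health_check(self, healthy: bool) -> str:
        """Record one health check of the active node; return the node in charge."""
        if healthy:
            self._consecutive_failures = 0
            return self.active

        self._consecutive_failures += 1
        threshold_reached = self._consecutive_failures >= self.failure_threshold
        if threshold_reached and self.standby is not None:
            # Promote the hot spare. The failed node is not reused as a spare
            # here; it would need to be repaired and re-added first.
            self.active, self.standby = self.standby, None
            self._consecutive_failures = 0
        return self.active


if __name__ == "__main__":
    pair = ActivePassivePair("db-primary", "db-standby", failure_threshold=2)
    print(pair.record_health_check(False))  # still db-primary after one miss
    print(pair.record_health_check(False))  # db-standby has been promoted
```

Requiring several consecutive failures before promoting the spare is a design choice in this sketch, intended to avoid failing over on a single missed check.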

It doesn’t matter if you have your infrastructure hosted by the cheapest or the most expensive provider, in the worst rated or the best rated data center. If you’re serious about mitigating risk, you should have a disaster recovery plan.

A disaster recovery plan is a formal procedure created by an organization for dealing with unplanned incidents. An infrastructure disaster recovery plan will include measures for dealing with emergencies like physical building damage, cyber-attacks, server failure, and other hardware issues.

When creating a disaster recovery plan, there are two critical parameters. These are your Recovery Time Objective (RTO) and Recovery Point Objective (RPO).

RTO: This is a measure of the duration of “real time” that an application can be down before causing significant damage to a business. It’s a threshold for how long a business can survive without its infrastructure. Mission-critical applications will have a very short RTO whereas less critical applications can often afford a longer RTO.

To calculate your RTO, you’ll need to identify how much downtime your business can afford, your budget for system restoration, and the tools needed to achieve a full system restoration.

RPO: This is a time-based measure of the maximum amount of data that a business can afford to lose after an unplanned incident. RPO is effectively the maximum acceptable amount of data-loss and is measured in terms of the time elapsed since the most recent reliable data backup.

Larger organizations typically require backup from the point of failure. To calculate your RPO you’ll need to identify how often your critical data is updated, the frequency of your backups, and your storage capacity for backups.
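As a simple worked example, the Python sketch below checks a backup schedule and an estimated restore time against RPO and RTO targets. The function names and the figures used are hypothetical, and the sketch assumes that backups complete instantly and are always reliable, so the worst-case data loss equals the full interval between backups.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta) -> timedelta:
    """Worst case: the failure happens just before the next backup would run."""
    return backup_interval


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """True if the backup schedule keeps worst-case data loss within the RPO."""
    return worst_case_data_loss(backup_interval) <= rpo


def meets_rto(estimated_restore_time: timedelta, rto: timedelta) -> bool:
    """True if the application can be restored within the RTO."""
    return estimated_restore_time <= rto


if __name__ == "__main__":
    rpo = timedelta(hours=1)
    rto = timedelta(hours=4)

    # Daily backups cannot satisfy a one-hour RPO, but 30-minute backups can.
    print(meets_rpo(timedelta(hours=24), rpo))
    print(meets_rpo(timedelta(minutes=30), rpo))

    # A restore estimated at six hours would miss a four-hour RTO.
    print(meets_rto(timedelta(hours=6), rto))
```

The same comparison works in reverse: once the RPO is agreed, it sets the longest backup interval the business can accept.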

Nobody wants to think about the worst-case scenario and even fewer people want to pay to cover a server failure that may or may not happen. And because of this, many organizations neglect disaster recovery planning. But even the best technology is vulnerable to failure so both RTO and RPO are essential to ensure rapid recovery after an unplanned incident.

Prior planning prevents poor performance. And that also applies to your infrastructure. Whether we like it or not, hardware issues will rear their head, server failure will happen, and environmental hazards exist.

Putting in place measures to improve infrastructure redundancy means that when the worst happens, you’ll have the resources in place to keep your website or application online.

Want to achieve infrastructure resiliency? Get in touch and we will be happy to assist you.

Last Updated: 17 September 2023

Frances is proficient in taking complex information and turning it into engaging, digestible content that readers can enjoy. Whether it's a detailed report or a point-of-view piece, she loves using language to inform, entertain and provide value to readers.