Concepts

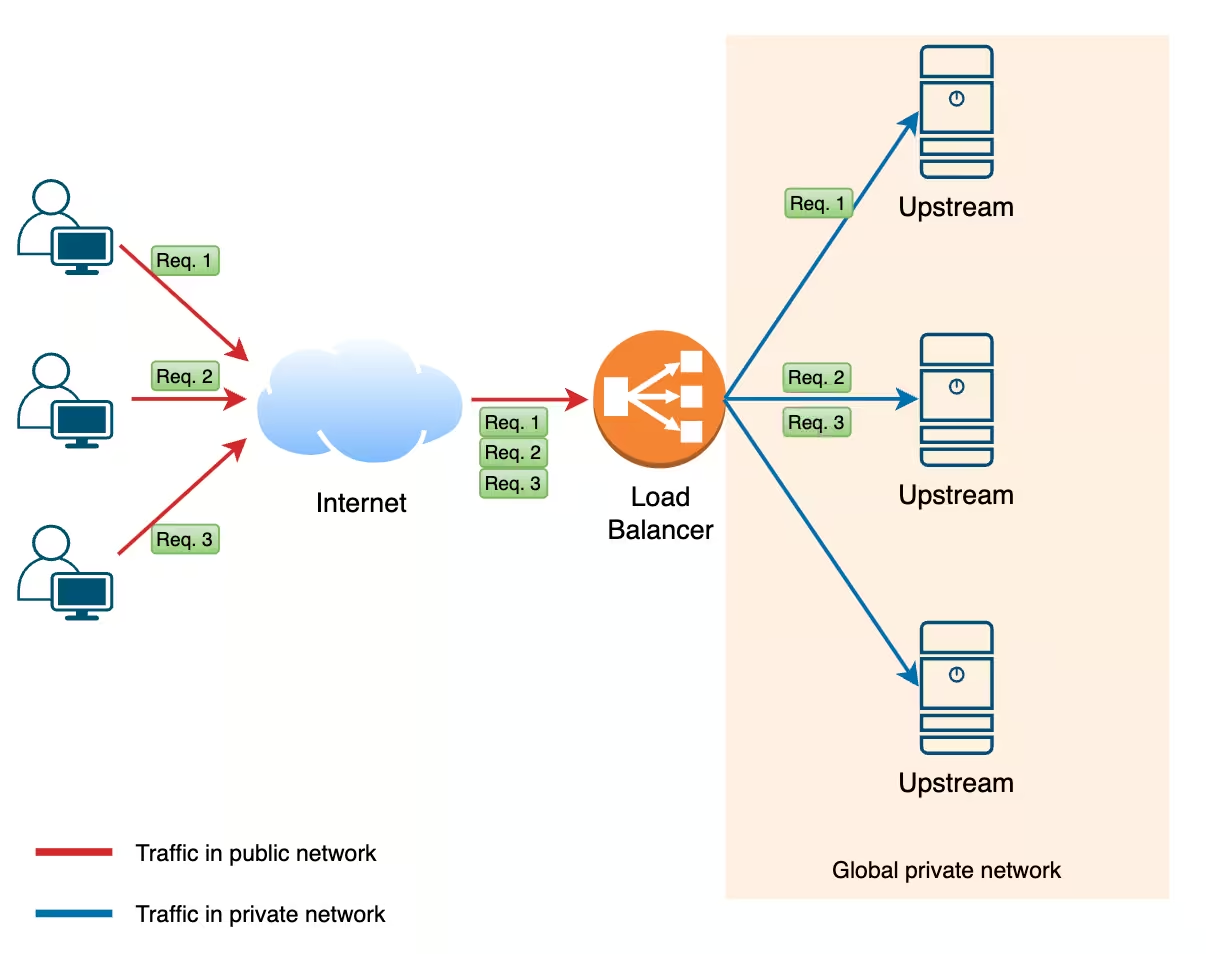

A load balancer distributes incoming workload among upstream servers, which can be either dedicated or cloud servers.

Key functions:

- Distributing traffic among upstreams

- Enhancing fault tolerance and redundancy

- Ensuring scalability without system downtime

How it works

|

Component |

Description |

|

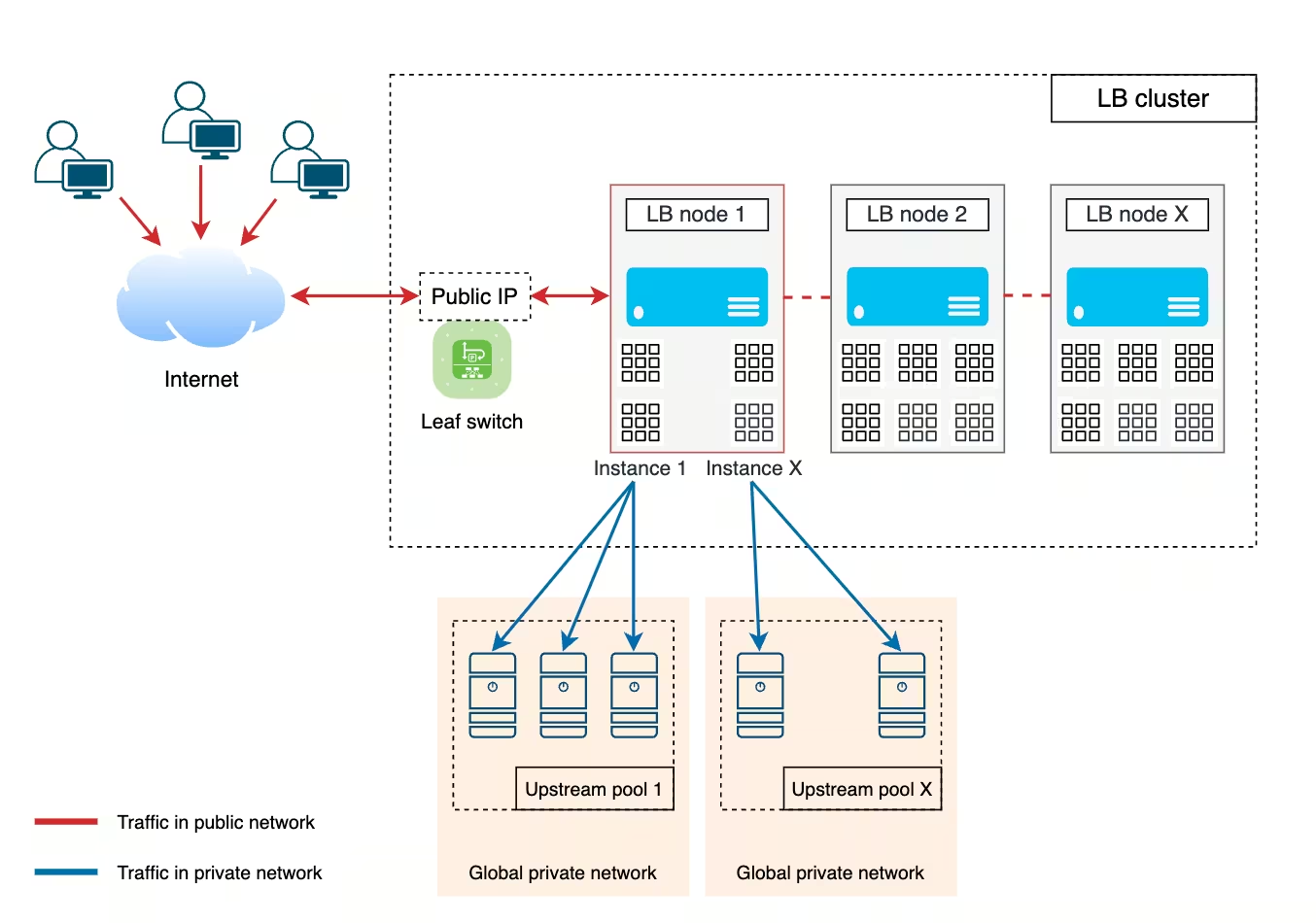

Load balancing cluster |

A pool of at least three load balancer nodes and switches. There are two types of cluster:

|

|

Load balancing node |

A pre-configured physical server running the NGINX Plus system. Each node, along with pre-configured switches, handles incoming traffic and distributes requests to upstream servers. If a node fails, it is replaced by another using clustering mechanisms. |

|

Upstream servers |

A pool of cloud or dedicated servers among which the traffic load is distributed. |

|

Load balancing instance |

A set of NGINX configurations on a load balancer node, linked to a specific pool of upstream servers. Each client is allocated an isolated load balancer instance on a node. These instances are individually configured, providing isolated and customizable load balancing within the overall infrastructure. |

|

Public IP |

Is the entry point for client traffic and is used by all nodes in the cluster. |

The load balancer serves as the entry point for client requests, isolating the upstream server pool from direct client access. Our load balancer is based on NGINX Plus software, an enhanced commercial version of NGINX. The service operates on a complex system of interconnected components. The key components are outlined below.

Key components

The load balancer uses BGP (Border Gateway Protocol) and XDP (eXpress Data Path):

- BGP is a standardized protocol for exchanging routing information between systems

- XDP is a framework for fast packet processing in the Linux kernel

Depending on the cluster, XDP or ECMP (Equal-Cost Multi-Path Routing) may be utilized to optimize traffic routing and enhance performance.
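To illustrate the idea behind ECMP, the sketch below maps a flow to one of several equal-cost next hops by hashing its 5-tuple, so packets of the same connection keep reaching the same node. It is a conceptual illustration only: the node names, the choice of SHA-256, and the `ecmp_next_hop` helper are assumptions made for this example, and real routers use their own hardware hash functions.

```python
import hashlib


def ecmp_next_hop(flow, next_hops):
    """Map a flow 5-tuple to one of several equal-cost next hops."""
    # Serialize the 5-tuple into a stable byte string.
    key = "|".join(str(part) for part in flow).encode()
    # Hash the flow and reduce the hash to an index into the next-hop list.
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(next_hops)
    return next_hops[index]


# Hypothetical load balancer nodes advertising the same public IP.
nodes = ["lb-node-1", "lb-node-2", "lb-node-3"]

# (protocol, source IP, source port, destination IP, destination port)
flow = ("tcp", "203.0.113.9", 56324, "198.51.100.10", 443)
chosen = ecmp_next_hop(flow, nodes)
```

Because the choice depends only on the flow identifiers, repeated packets of one connection are not spread across nodes, while different connections are distributed among them.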

Using the assigned public IP address, the load balancer instance accepts client requests and distributes them among available upstream servers. The distribution is performed based on the health and state of the servers, as well as the distribution algorithms.

Algorithms

A load balancer uses algorithms to determine how requests are distributed among upstream servers.

Our L4 and L7 load balancers use the following algorithms:

- Random least connections. Picks two servers at random from the entire pool and routes the request to the one with fewer active connections

- Weighted least connections. Routes requests to the server with the fewest active connections, with the ability to assign priorities to upstream servers based on weight

- Round robin. Distributes requests sequentially in a cyclic order among servers in the pool
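The three algorithms above can be sketched in a few lines of Python. This is a simplified model rather than the NGINX Plus implementation: the `Upstream` class and server names are hypothetical, and treating the weighted variant as "lowest ratio of active connections to weight" is an assumption made for this example.

```python
import itertools
import random
from dataclasses import dataclass


@dataclass
class Upstream:
    name: str
    weight: int = 1   # relative capacity; a higher weight attracts more traffic
    active: int = 0   # current number of active connections


def random_least_connections(pool, rng=random):
    """Sample two distinct servers at random and keep the less loaded one."""
    if len(pool) == 1:
        return pool[0]
    first, second = rng.sample(pool, 2)
    return first if first.active <= second.active else second


def weighted_least_connections(pool):
    """Pick the server with the lowest active-connections-to-weight ratio."""
    return min(pool, key=lambda server: server.active / server.weight)


def round_robin(pool):
    """Return an endless iterator that cycles through the pool in order."""
    return itertools.cycle(pool)


pool = [
    Upstream("app-1", weight=1, active=4),
    Upstream("app-2", weight=3, active=6),
    Upstream("app-3", weight=1, active=3),
]
```

In this pool, `app-2` has the most active connections but also three times the weight, so the weighted algorithm still prefers it, while the random two-choice algorithm never selects it because either alternative is less loaded.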

Types of load balancing

We offer two types of load balancing based on traffic type, aligned with the OSI model. Both types can store logs in cloud storage: information about the requests processed by the load balancer is saved to log files in the NGINX format.

L7 load balancer (HTTP/HTTPS)

Works as a reverse proxy server and suits web applications and APIs that require content-based routing.

Key features:

- Operates at the application layer (layer 7) of the OSI model

- Supports the HTTP, HTTPS, and HTTP/2 protocols

- Enables uploading and managing SSL certificates for processing HTTPS requests

- Supports SSL termination, handling encryption and decryption of HTTPS traffic

- Verifies the availability of upstream servers using the health checks feature over protocols such as HTTP, HTTPS, gRPC, and gRPCS

- Routes traffic based on the content of HTTP/HTTPS requests, including headers, URIs, parameters, and methods (e.g., GET, POST)

- Implements session persistence (sticky sessions) to bind client requests to a specific server for the duration of the session

- Identifies the user's geolocation based on their IP address using the Geo IP feature
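Content-based routing and session persistence from the list above can be modeled roughly as follows. The rules, pool names, the `X-Beta` header, and the `session` cookie are all hypothetical, and hashing the session cookie is just one simple way to get stickiness; it is not presented as the mechanism NGINX Plus uses.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Request:
    method: str
    path: str
    headers: dict = field(default_factory=dict)
    cookies: dict = field(default_factory=dict)


# Hypothetical routing rules, evaluated in order: (predicate, pool name).
RULES = [
    (lambda r: r.path.startswith("/api/"), "api"),
    (lambda r: r.method == "POST" and r.path.startswith("/upload"), "upload"),
    (lambda r: r.headers.get("X-Beta") == "1", "beta"),
]

POOLS = {
    "api": ["api-1", "api-2"],
    "upload": ["upload-1"],
    "beta": ["beta-1"],
    "default": ["web-1", "web-2", "web-3"],
}


def choose_pool(request):
    """Return the name of the first pool whose rule matches the request."""
    for matches, pool_name in RULES:
        if matches(request):
            return pool_name
    return "default"


def choose_server(request):
    """Pick a server; requests with the same session cookie stick to one server."""
    servers = POOLS[choose_pool(request)]
    session_id = request.cookies.get("session")
    if session_id is None:
        # No session yet: a real balancer would apply its distribution algorithm here.
        return servers[0]
    digest = hashlib.sha256(session_id.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

A request such as `Request("GET", "/api/users")` lands in the `api` pool, and two requests carrying the same `session` cookie resolve to the same server for as long as the pool is unchanged.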

The L7 load balancer can pass the client's real IP address to upstream servers using specific HTTP request headers. Upstream servers then see the original IP address of the request instead of the load balancer's IP address, which is important for analytics, logging, and access control.

The following options are available in the customer portal:

| Setting | Description |
| --- | --- |
| Disabled | The load balancer does not use headers to pass the real IP address. Upstream servers will see the load balancer's IP address |
| X-Real-IP | The load balancer extracts the real IP address from the |
| X-Forwarded-For | The load balancer uses the |

Additionally, the Trusted networks field is available, where you can specify trusted networks in CIDR format (e.g., X.X.X.X/16..32). Real IP addresses will only be accepted from these networks, enhancing security and preventing inaccurate data from unverified sources.
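The effect of trusted networks can be illustrated with a short sketch: a forwarded address is honored only when the connection itself comes from a trusted network. The network value, function names, and the right-to-left walk over `X-Forwarded-For` are assumptions made for this example, not a description of the service's internal logic.

```python
import ipaddress

# Hypothetical value, as it might be entered in the Trusted networks field.
TRUSTED_NETWORKS = [ipaddress.ip_network("10.0.0.0/16")]


def is_trusted(address):
    """Return True if the address parses and belongs to a trusted network."""
    try:
        ip = ipaddress.ip_address(address)
    except ValueError:
        return False
    return any(ip in network for network in TRUSTED_NETWORKS)


def resolve_client_ip(peer_address, forwarded_for=None):
    """Return the address to treat as the client's real IP."""
    # Ignore the header entirely when the sender is not trusted.
    if not forwarded_for or not is_trusted(peer_address):
        return peer_address
    hops = [hop.strip() for hop in forwarded_for.split(",") if hop.strip()]
    # Walk from the most recent hop backwards, skipping trusted proxies.
    for hop in reversed(hops):
        if not is_trusted(hop):
            try:
                ipaddress.ip_address(hop)
            except ValueError:
                return peer_address  # malformed entry: fall back to the peer
            return hop
    return hops[0] if hops else peer_address
```

With these settings, a header sent from `10.0.3.7` is honored, while the same header sent from an address outside `10.0.0.0/16` is ignored and the connecting address is used instead.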

L4 load balancer (TCP)

L4 load balancers are designed for high-performance systems and applications operating at the TCP level, such as databases, file servers, VoIP, gaming, and more.

Key features:

- Operates at the transport layer (layer 4) of the OSI model

- Balances TCP traffic

- Routes traffic based on IP addresses and ports without inspecting packet content

- Supports the PROXY protocol, which forwards the client's real IP address to the upstream server. Without it, upstream servers see only the load balancer's IP address

- Verifies the availability of upstream servers using the health checks feature
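For upstream applications that need to read the client address, the sketch below builds and parses a header in the human-readable version 1 format of the PROXY protocol, which is sent as a single line before the application data. Which protocol version the service emits is not stated here, so treat the version, the function names, and the addresses as assumptions for illustration.

```python
def build_proxy_v1(src_ip, src_port, dst_ip, dst_port):
    """Build a PROXY protocol v1 header line for a TCP connection."""
    family = "TCP6" if ":" in src_ip else "TCP4"
    line = f"PROXY {family} {src_ip} {dst_ip} {src_port} {dst_port}\r\n"
    return line.encode("ascii")


def parse_proxy_v1(header):
    """Parse a PROXY protocol v1 header; return None for UNKNOWN connections."""
    if not header.startswith(b"PROXY ") or not header.endswith(b"\r\n"):
        raise ValueError("not a PROXY protocol v1 header")
    fields = header[:-2].decode("ascii").split(" ")
    if fields[1] == "UNKNOWN":
        return None
    if len(fields) != 6 or fields[1] not in ("TCP4", "TCP6"):
        raise ValueError("malformed PROXY protocol v1 header")
    _, family, src_ip, dst_ip, src_port, dst_port = fields
    return {
        "family": family,
        "source_address": src_ip,        # the client's real IP address
        "destination_address": dst_ip,
        "source_port": int(src_port),
        "destination_port": int(dst_port),
    }


header = build_proxy_v1("203.0.113.9", 56324, "10.0.0.5", 443)
info = parse_proxy_v1(header)
```

An upstream service that enables PROXY protocol support reads this line first and uses `source_address` in place of the address of the TCP peer.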

Health checks

The load balancer regularly checks the status of each upstream server through health checks. It sends these checks at set intervals, using the specified port, protocol, and path. If a health check fails, the upstream server is temporarily removed from the pool of available upstream servers. Once it passes a subsequent health check, it is automatically reinstated into the pool.

The load balancer directs traffic only to healthy upstreams.
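The remove-and-reinstate behavior can be modeled as a small state tracker. In this sketch the probe is an injected function so the example runs without network access; the class names, the `/health` path, and the single-failure threshold are assumptions for illustration, not the service's actual settings.

```python
from dataclasses import dataclass


@dataclass
class HealthCheck:
    port: int
    protocol: str
    path: str
    interval_seconds: float  # how often run_checks() is expected to be called


class UpstreamPool:
    def __init__(self, servers, check, probe):
        self.servers = list(servers)
        self.check = check
        self.probe = probe  # callable(server, check) -> True if the server is healthy
        self.healthy = {server: True for server in self.servers}

    def run_checks(self):
        """Probe every server once and record the result."""
        for server in self.servers:
            self.healthy[server] = bool(self.probe(server, self.check))

    def available(self):
        """Return only the servers that passed their latest check."""
        return [server for server in self.servers if self.healthy[server]]


# Simulated outage: "app-2" fails its probe until it is removed from `down`.
down = {"app-2"}
pool = UpstreamPool(
    ["app-1", "app-2"],
    HealthCheck(port=80, protocol="http", path="/health", interval_seconds=5.0),
    probe=lambda server, check: server not in down,
)
pool.run_checks()
```

After this first round only `app-1` is available. Clearing `down` and calling `run_checks()` again reinstates `app-2` without any manual step.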

Geo IP

Geo IP is a load balancer feature that determines the geographic location of a client based on their IP address. It is useful for geographically distributed systems, enabling the following:

- Routing requests based on the client's location

- Managing access (allow or restrict) to specific resources based on the client's geographic location

- Optimizing the route of requests to reduce latency by directing requests to the nearest servers
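The three uses above can be combined in one small sketch: look up the client's region, deny restricted regions, and otherwise route to the nearest pool. The lookup table uses reserved documentation address ranges, and the region names, pools, and deny list are hypothetical; a real deployment would rely on a Geo IP database rather than a hand-written table.

```python
import ipaddress

# Hypothetical IP-range-to-region table (documentation address ranges).
GEO_TABLE = [
    (ipaddress.ip_network("192.0.2.0/24"), "EU"),
    (ipaddress.ip_network("198.51.100.0/24"), "US"),
    (ipaddress.ip_network("203.0.113.0/24"), "APAC"),
]

REGION_POOLS = {
    "EU": ["eu-app-1", "eu-app-2"],
    "US": ["us-app-1", "us-app-2"],
}
DEFAULT_POOL = ["eu-app-1", "us-app-1"]
DENIED_REGIONS = {"APAC"}  # example access restriction


def lookup_region(client_ip):
    """Return the region for an IP address, or None if it is not in the table."""
    ip = ipaddress.ip_address(client_ip)
    for network, region in GEO_TABLE:
        if ip in network:
            return region
    return None


def route(client_ip):
    """Return the pool for the client's region, or None if access is denied."""
    region = lookup_region(client_ip)
    if region in DENIED_REGIONS:
        return None
    return REGION_POOLS.get(region, DEFAULT_POOL)
```

Clients whose region is unknown fall back to `DEFAULT_POOL`, which keeps the service reachable when the lookup table has no entry for an address.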