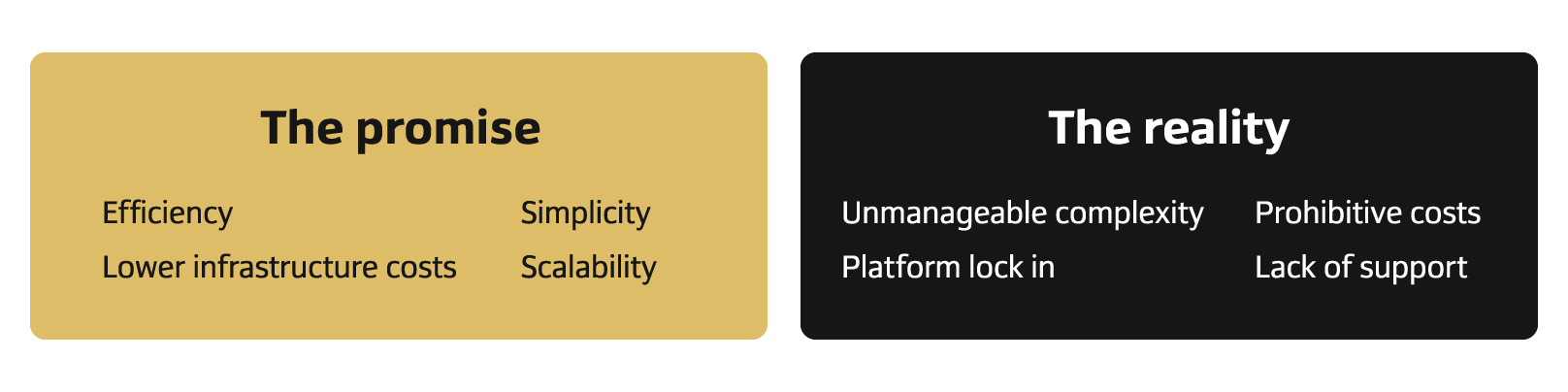

Hyperscale cloud is perceived as the simple and economical choice, but the reality is far more complex. Many businesses are drawn into hyperscale cloud environments by the promise of simplicity and free credits, only to find themselves locked into financially unsustainable contracts by the time those credits run out. “Cloud vendors tend to try and lock you into their ecosystem. If you do get locked in, then that’s great for them, but it’s not great for you. You’re just going to be limited in how you can scale”, comments Andrew Walker, Head of Business Development at Gameye.

Compute isn’t infinite for any provider. Even AWS runs out of compute sometimes, so being able to access different sources of compute is important for scalability and redundancy. But for companies locked into these services, migrating away (even in part) can be exceptionally difficult. As Nagorny explains, “as businesses integrate deeply with a particular cloud provider’s services, migrating to another platform can be costly and time-consuming”.

This is something that Gameye has become all too familiar with when working with game developers. “When it comes to vendor lock-in, we’ve had developers come to us going ‘we just ran out of credits, and our bill went through the roof. How can you help us keep this down?’”, shares Walker.

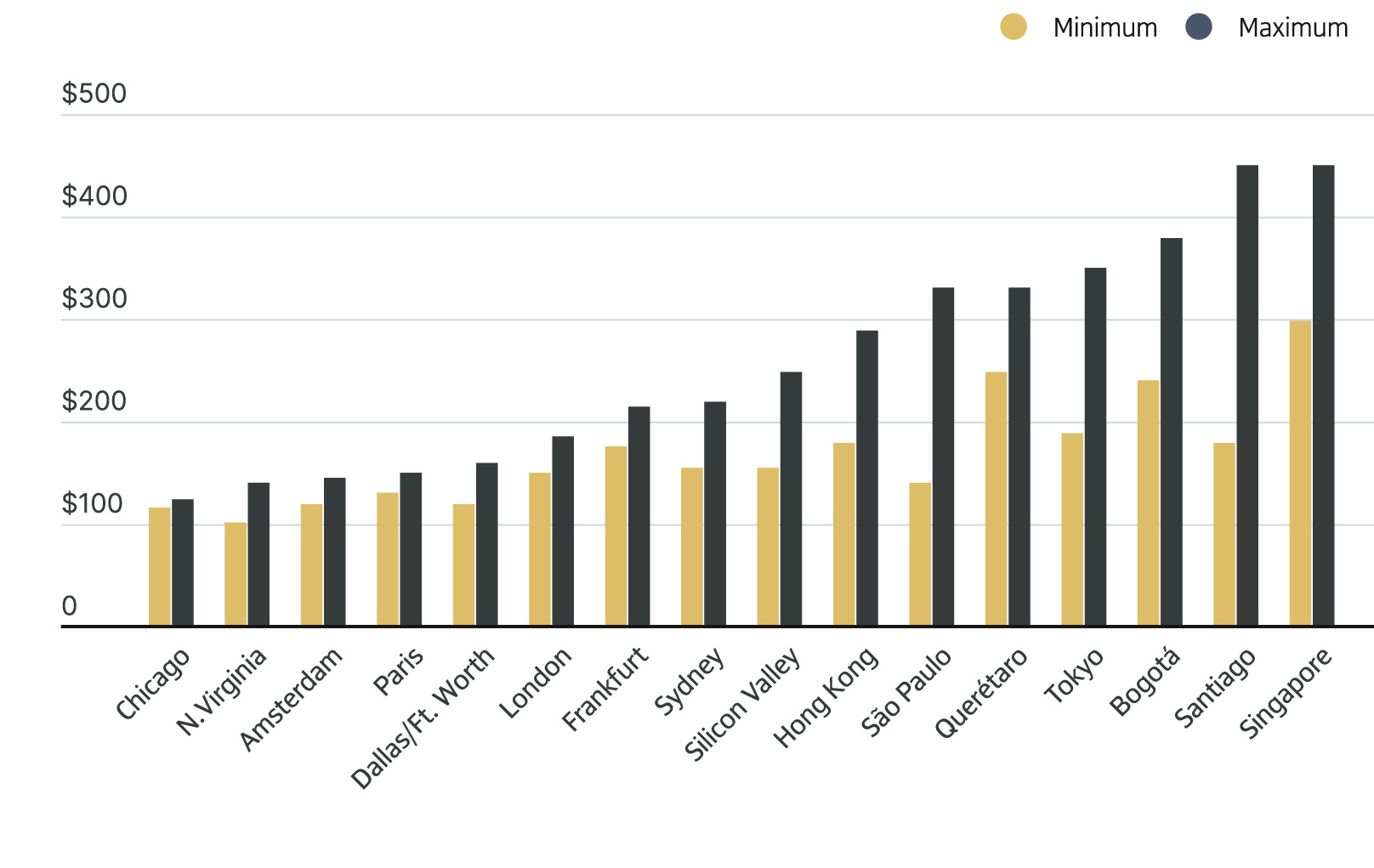

This issue is exacerbated by the data egress fees that many hyperscale cloud providers charge customers to move data out of the cloud. These fees vary with the volume of data, its origin, and its intended destination. AWS, for instance, charges $0.09 per gigabyte for the first 10 terabytes transferred out, and $0.02 per gigabyte for data transferred between EC2 instances in different AWS regions.
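To make those rates concrete, here is a minimal Python sketch of the arithmetic. It assumes the two per-gigabyte figures quoted above are flat rates, treats 10 terabytes as 10,240 gigabytes, and does not model pricing beyond the first 10 terabytes. The function and constant names are hypothetical, and actual rates vary by region and change over time.

```python
# Illustrative rates taken from the figures quoted in the text.
# Assumptions: flat per-GB pricing, 1 TB = 1,024 GB, no tiers beyond 10 TB.
INTERNET_EGRESS_USD_PER_GB = 0.09   # first 10 TB transferred out
INTER_REGION_USD_PER_GB = 0.02      # between EC2 instances in different regions
FIRST_TIER_LIMIT_GB = 10 * 1024     # 10 TB expressed in GB


def transfer_cost(gigabytes: float, usd_per_gb: float) -> float:
    """Return the cost in USD of moving `gigabytes` at a flat per-GB rate."""
    if gigabytes < 0:
        raise ValueError("gigabytes must be non-negative")
    return round(gigabytes * usd_per_gb, 2)


def internet_egress_cost(gigabytes: float) -> float:
    """Cost of moving data out of the cloud, within the first 10 TB only."""
    if gigabytes > FIRST_TIER_LIMIT_GB:
        raise ValueError("volumes above 10 TB are not modelled in this sketch")
    return transfer_cost(gigabytes, INTERNET_EGRESS_USD_PER_GB)


def inter_region_cost(gigabytes: float) -> float:
    """Cost of moving data between regions at the quoted flat rate."""
    return transfer_cost(gigabytes, INTER_REGION_USD_PER_GB)


if __name__ == "__main__":
    volume_gb = 5_000
    print(f"Out of the cloud: ${internet_egress_cost(volume_gb):,.2f}")
    print(f"Between regions:  ${inter_region_cost(volume_gb):,.2f}")
```

Under these assumptions, moving 5,000 gigabytes out of the cloud comes to $450, and moving the same volume between regions comes to $100. For a one-off migration of a larger dataset, the same multiplication shows how quickly the fee becomes a line item in its own right.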

Recent Ofcom investigations into the UK public cloud market also found egress fees to be significantly higher amongst the major players than elsewhere in the market, creating substantial barriers for customers wishing to migrate their data. There is movement on this issue: in January 2024, Google Cloud became the first major cloud provider to eliminate egress fees for customers choosing to migrate data away from the Google Cloud Platform. But it remains to be seen whether egress fees will be made reasonable across the board.

These obstacles to migration severely limit an organization’s access to alternative compute sources. The best approach is to avoid lock-in in the first place.

Why you need to be realistic about your scaling needs

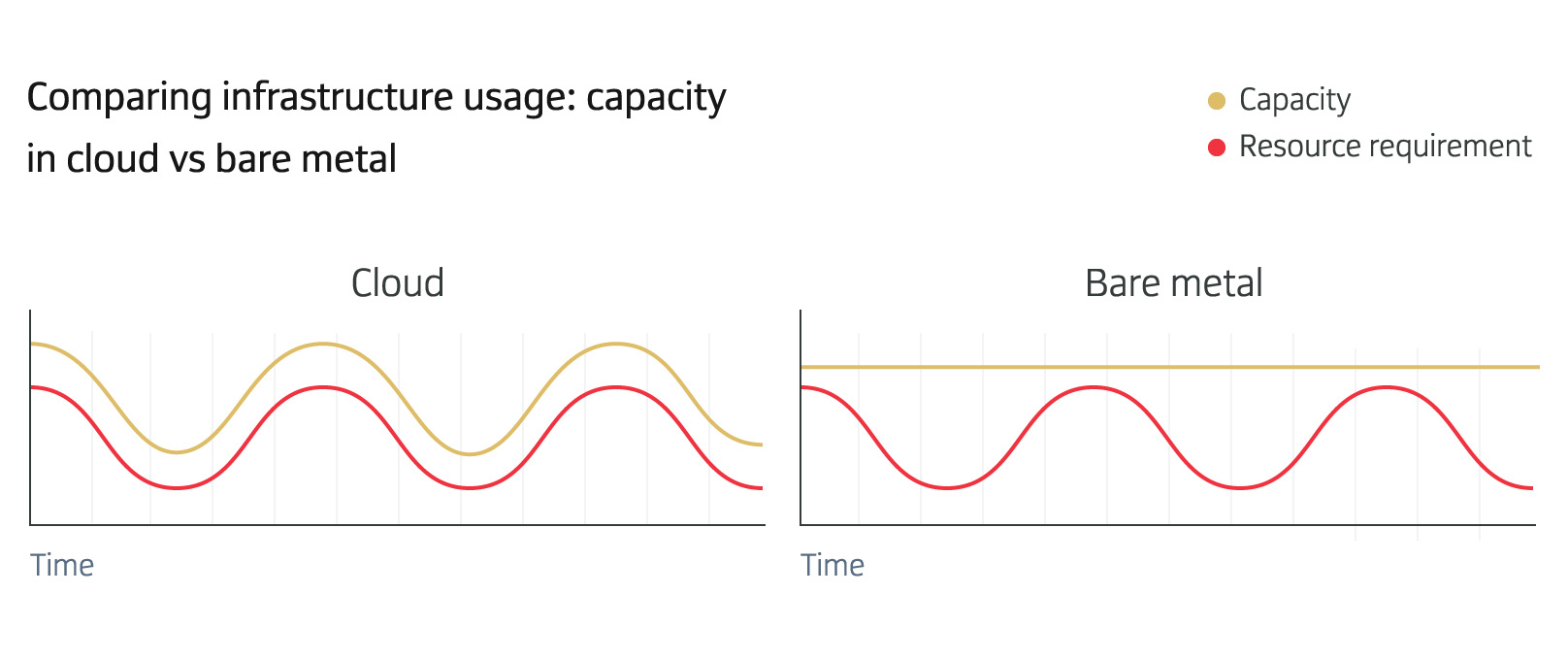

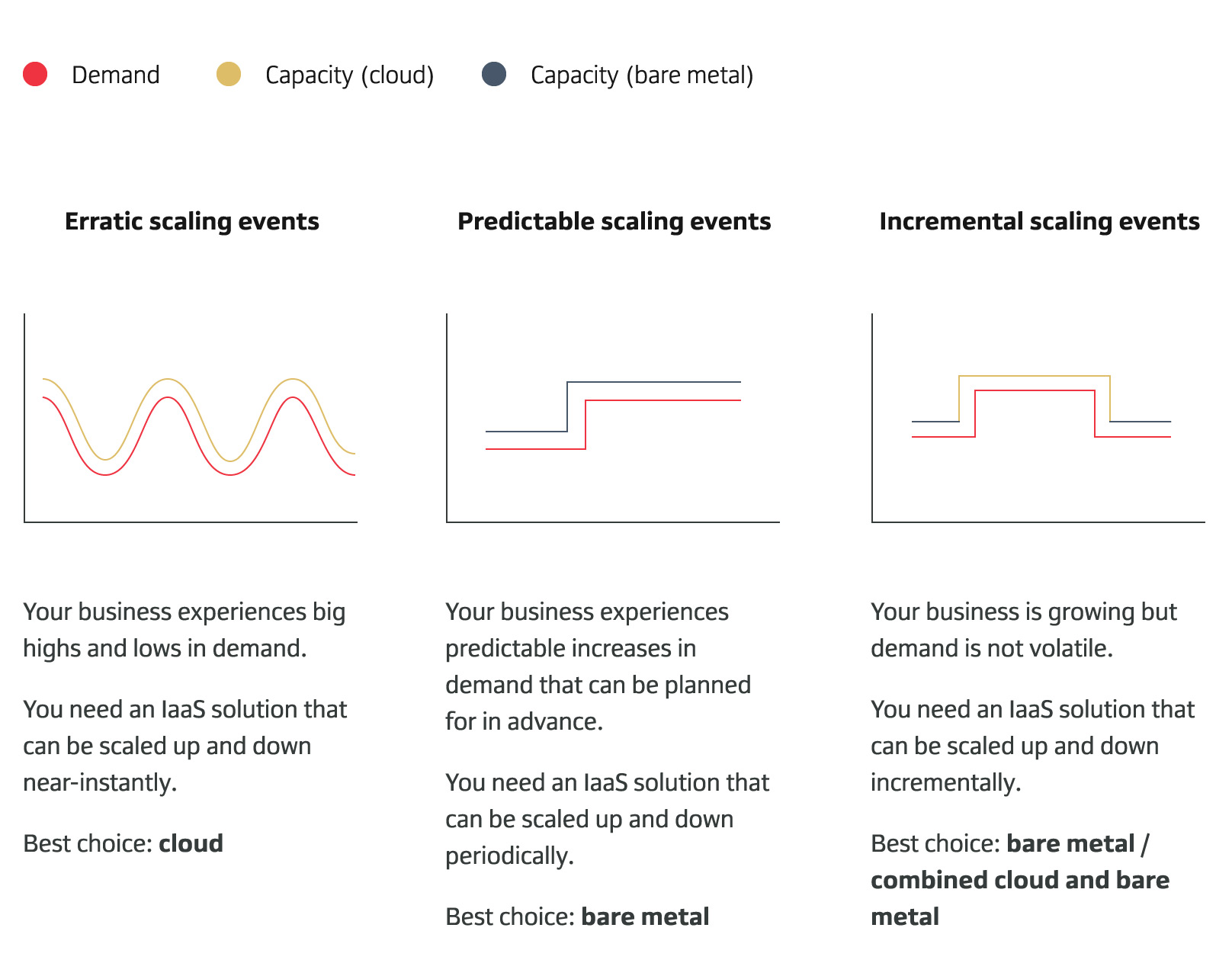

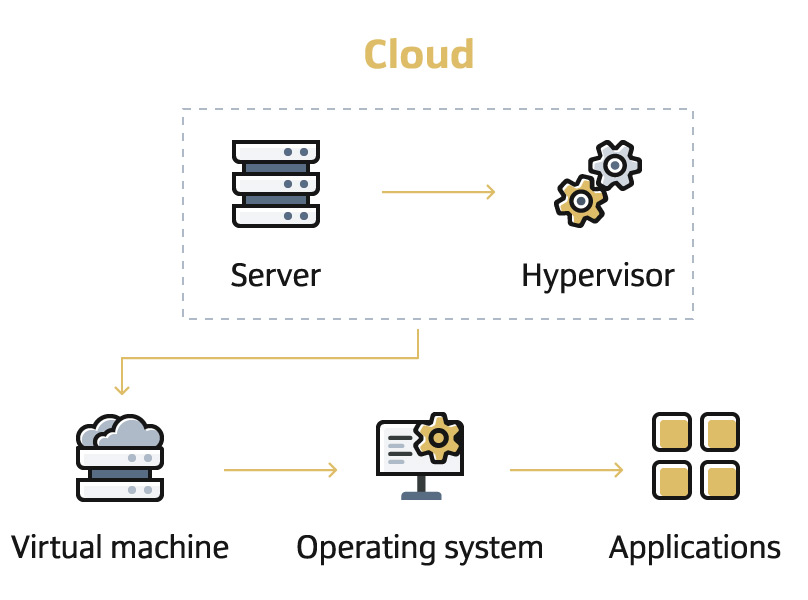

Hyperscale cloud is a great option for some businesses. For large-scale enterprise organizations that regularly experience huge peaks and troughs in demand, auto scaling (an AWS feature that continuously monitors an application and automatically increases resource capacity when demand spikes) is a significant advantage. Businesses like these need to be able to spin up additional virtual machines near instantaneously in accordance with daily usage curves. And that’s exactly what hyperscale cloud does best.

The issues arise when businesses that do not have these requirements still think they need hyperscale cloud. And misconceptions around infrastructure scalability are to blame. “They see a freedom there – unlimited resources”, explains the Global Director of Technology at an iGaming platform. But it can be difficult to understand whether that freedom is “fit for purpose”.

Every business has small peaks and troughs in daily usage, but the majority require incremental scaling at most.

So, whilst the cloud sells itself on the ability to scale near-instantly while optimizing spend, it’s probably not worth the premium for anyone who isn’t experiencing volatile demand.

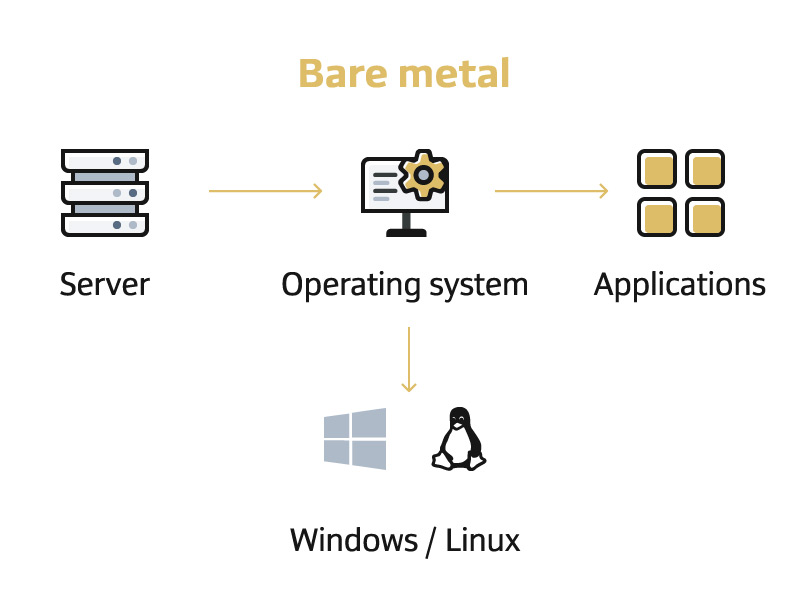

And when you consider that compute can cost 10x as much as it does on bare metal servers, on top of the complexity of managing auto scaling, that’s a lot of money to invest in something that wasn’t needed in the first place.